Demo applications up to DX-RT 3.1.0

DEEPX provides demo applications through the dx-app repository. These applications are included in the pre-built images provided by Embedded Artists, and can also be built into custom Yocto images by including the meta-ea-dx Yocto layer.

The way to run applications is version dependant. To check which version is being used, run dxrt-cli -s on target. The DXRT version shown on the first line like this. For 3.1.0 it looks like this:

DXRT v3.1.0

=======================================================

* Device 0: M1, Accelerator type

--------------------- Version ---------------------

This page is only for versions up to and including 3.1.0. For new versions visit Demo applications DX-RT 3.2.0.

Models and resources

To run the demo applications, compiled DNNX models as well as additional resources - such as sample videos and images - are required. These resources are not installed by default and must be downloaded separately by following the steps below.

- Download and unpack models.

The compiled models are downloaded from the DEEPX resource directory and unpacked into the directory: assets/models.

There are version dependencies between DEEPX software components, as described in the Version compatibility section. The example below downloads models that are compatible with dx-app version 2.1.0.

wget https://sdk.deepx.ai/res/models/models-2_1_0.tar.gz

mkdir -p assets/models

tar -xvf models-2_1_0.tar.gz -C assets/models/

- Download and unpack sample videos

Sample video files are downloaded from the DEEPX resource directory and unpacked into the directory: assets/videos.

wget https://sdk.deepx.ai/res/video/sample_videos.tar.gz

mkdir -p assets/videos

tar -xvf sample_videos.tar.gz -C assets/videos/

- Download sample images

Sample image files are downloaded from the dx-app GitHub repository and stored in the directory: assets/images.

mkdir -p assets/images

wget -P assets/images/ https://raw.githubusercontent.com/DEEPX-AI/dx_app/refs/heads/main/sample/ILSVRC2012/1.jpeg

wget -P assets/images/ https://raw.githubusercontent.com/DEEPX-AI/dx_app/refs/heads/main/sample/img/7.jpg

wget -P assets/images/ https://raw.githubusercontent.com/DEEPX-AI/dx_app/refs/heads/main/sample/img/8.jpg

Image classification

Image classification is a computer vision task in which a neural network analyzes an image and assigns it a single label from a predefined set of categories. The image classification demo demonstrates this functionality by running a trained model on a static image.

Run the following command to classify an image:

classification -m assets/models/EfficientNetB0_4.dxnn -i assets/images/1.jpeg

The output from the classification application will be similar to the example shown below. In this example, the model assigns the image class index 321.

modelPath: assets/models/EfficientNetB0_4.dxnn

imgFile: assets/images/1.jpeg

loopTest: 1

Top1 Result : class 321

The reported value represents a class index, which corresponds to an entry in the list of categories that the model was trained on. The list of categories is provided in a JSON file available in the dx-app repository.

In this JSON file, the first category is listed on row 37, which corresponds to class index 0. Using this offset, the category label for class index 321 can be determined by calculating: 321 + 37 = 358.

Row 358 in the JSON file contains the label "admiral", which corresponds to an Admiral butterfly.

Object detection

This section describes how to run object detection demos using YOLO-based models. Object detection is a computer vision task in which a neural network identifies and localizes multiple objects within an image or video by drawing bounding boxes around detected objects and assigning class labels.

Run the following command to perform object detection on a video stream. When a display is connected, the video output will be shown with bounding boxes overlaid on the detected objects.

yolo -m assets/models/YoloV7_PPU.dxnn -p 4 -v assets/videos/snowboard.mp4

When the demo finishes, a large amount of output will be printed to the console, including performance-related information. One key metric is the frames per second (fps) value, which indicates the runtime performance of the object detection pipeline. An example output is shown below.

Video file ended.

[DXAPP] [INFO] total time : 41379831 us

[DXAPP] [INFO] per frame time : 48397 us

[DXAPP] [INFO] fps : 20.6627

Using other models

The example above uses the YoloV7_PPU.dxnn model file together with the object detection parameter -p 4. There is a direct dependency between the selected model and the object detection parameter, as different YOLO model variants require different post-processing configurations.

Detailed information about the object detection parameter can be found in the yolo demo usage documentation:

yolo --help

object detection model demo application usage

Usage:

yolo [OPTION...]

-m, --model arg (* required) define dxnn model path

-p, --parameter arg (* required) define object detection parameter

0: yolov5s_320, 1: yolov5s_512, 2: yolov5s_640, 3:

yolov7_512, 4: yolov7_640, 5: yolov8_640, 6:

yolox_s_512, 7: yolov5s_face_640, 8: yolov3_512,

9: yolov4_416, 10: yolov9_640, 11: yolov5s_512_ppu

(optimized for PPU postprocessing), 12:

scrfd_face_640_ppu (optimized for PPU

postprocessing) (default: 0)

...

As an additional reference, the run_demo.sh script available in the dx-app repository lists the object detection parameters used for each supported model. This script can be used as a practical guide when selecting parameters for other models.

Pose estimation

This section describes how to run the pose estimation demo using the YOLOv5 Pose model. Pose estimation is a computer vision task used to identify and track key anatomical landmarks - such as joints or other keypoints - of a person or object within an image or video.

Run the following command to perform pose estimation on an image:

pose -m assets/models/YOLOV5Pose640_1.dxnn -i assets/images/7.jpg

The output from the pose estimation application will be similar to the example shown below. In this example, two persons are detected, and the estimated poses are saved to the file result.jpg. The resulting image, which includes the detected keypoints and skeletal overlays, is shown below.

YOLO created : 25500 boxes, 1 classes,

Detected 2 boxes.

BBOX:person(0) 0.908174, (52.9721, 212.993, 228.171, 448.604)

BBOX:person(0) 0.892166, (316.646, 232.729, 448.644, 428.188)

Result saved to result.jpg

[DXAPP] [INFO] total time : 304924 us

[DXAPP] [INFO] per frame time : 304924 us

[DXAPP] [INFO] fps : 3.28947

Segmentation

This section describes how to run the semantic segmentation demo using the DeepLabV3Plus model. Semantic segmentation is a computer vision task that assigns a class label to each pixel in an image, effectively partitioning the image into meaningful regions. This technique is commonly used for applications such as scene understanding and image analysis.

Run the following command to perform segmentation on an image:

segmentation -m assets/models/DeepLabV3PlusMobileNetV2_2.dxnn -i assets/images/8.jpg

The output from the segmentation application will be similar to the example shown below. In this example, the segmentation result is saved to the file result.jpg. The resulting image, which visualizes the segmented regions, is shown below.

modelpath: assets/models/DeepLabV3PlusMobileNetV2_2.dxnn

parameter: 0

imgFile: assets/images/8.jpg

videoFile:

cameraInput: 0

Result saved to result.jpg

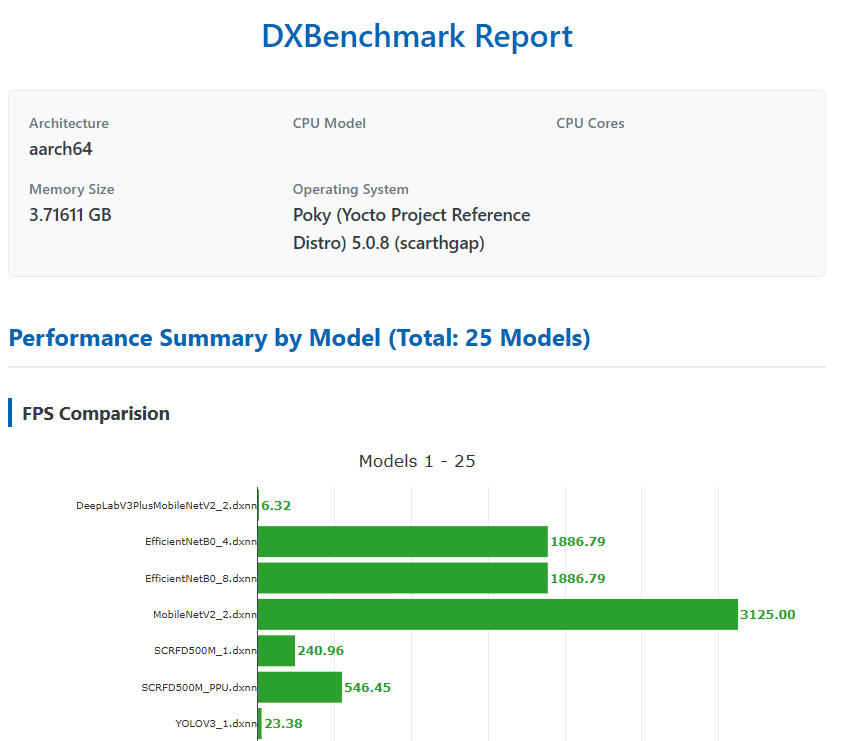

Benchmark

A benchmark tool named dxbenchmark is provided as part of the dx-rt repository. This tool can be used to quickly evaluate the inference performance of different models on the DX-M1 accelerator.

Run the following command to benchmark all models located in the assets/models directory. In this example, 100 inference loops are executed for each model:

dxbenchmark --dir assets/models/ -l 100

Depending on the number of models and the selected loop count, the benchmark may take several minutes to complete. Once finished, the benchmark results are generated in multiple formats, including:

- a JSON file

- a CSV file

- an HTML report

These reports provide detailed performance metrics for each model, such as inference time and throughput.

Below is a screenshot showing a portion of the generated HTML report.