Introduction

The DX-M1 is an AI accelerator module developed by DEEPX for high-performance, low-power neural network inference at the edge. Delivering up to 25 TOPS at just 5 W, it is optimized for embedded systems that need to run computer vision and AI workloads efficiently without relying on cloud resources.
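As a rough guide, the two headline figures imply a peak efficiency of about 5 TOPS per watt. This assumes both numbers are quoted at the same operating point; sustained throughput and power draw will vary with the model and workload.

```latex
\text{efficiency}_{\text{peak}} = \frac{25\ \text{TOPS}}{5\ \text{W}} = 5\ \text{TOPS/W}
```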

Built around DEEPX’s proprietary neural processing architecture, the DX-M1 supports a wide range of modern deep learning models, enabling real-time inference for applications such as image classification, object detection, and sensor data processing.

This documentation describes how to use the DX-M1 with supported SoMs, covering hardware setup and running the demo applications.

Integration options

The DX-M1 AI accelerator can be used in two supported configurations.

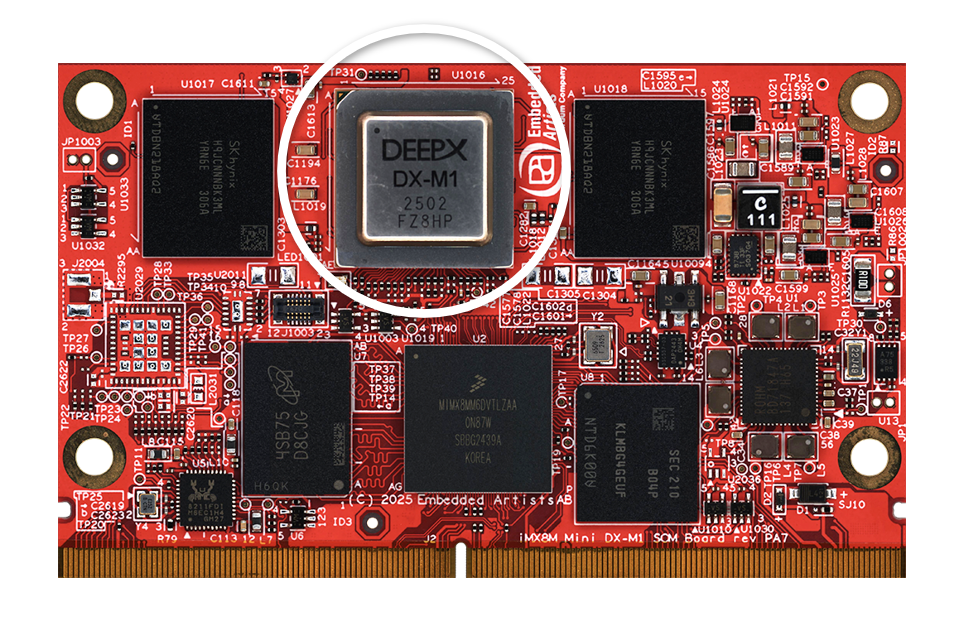

In the first configuration, the DX-M1 is an onboard component on selected System-on-Modules (SoMs). The accelerator is integrated directly into the SoM hardware design, providing a compact solution that needs no separate module.

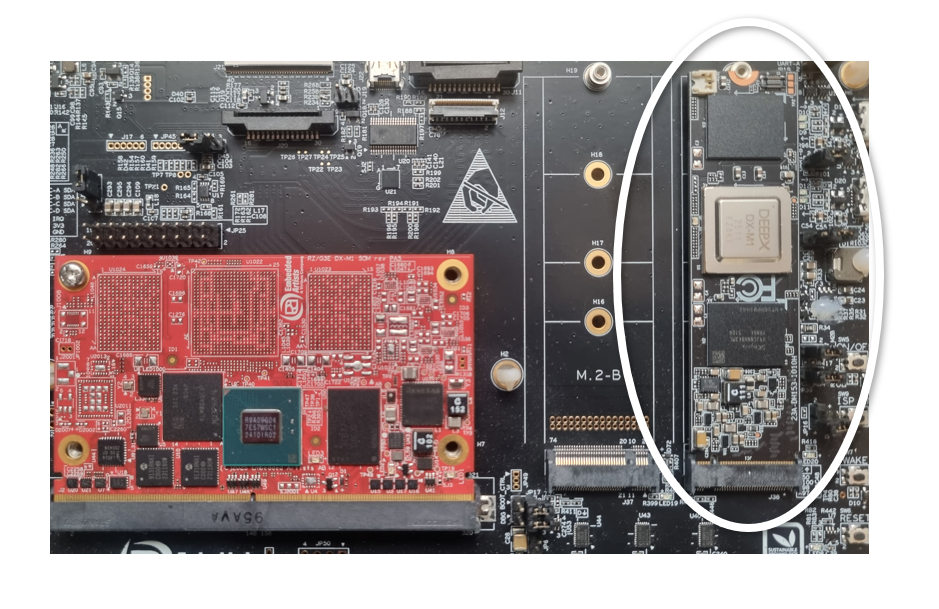

Alternatively, the DX-M1 is available as an M.2 module for use with carrier boards that provide an M.2 M-Key slot with PCIe connectivity, enabling flexible integration of the accelerator outside the SoM.

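| Integrated on SoM | M.2 module on carrier board |
|---|---|
| DX-M1 integrated directly into the SoM hardware design | DX-M1 M.2 module in a carrier board's M.2 M-Key slot with PCIe connectivity |
| Compact solution | Flexible integration outside the SoM |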
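In the M.2 configuration the accelerator is attached over PCIe, so on a Linux-based SoM a first sanity check is whether the host enumerates it at all. The sketch below scans the standard Linux sysfs PCI directory and lists functions whose PCI base class is 0x12 ("processing accelerator"). It is a hypothetical helper, not part of any DEEPX tooling, and it assumes the DX-M1 reports that base class; if the module enumerates with a different class code, filtering on its vendor and device IDs would be needed instead.

```python
from pathlib import Path

# PCI base class 0x12 is "processing accelerator" in the PCI class code table.
# Assumption: the DX-M1 reports this base class; verify against your hardware.
ACCELERATOR_BASE_CLASS = 0x12


def find_pci_accelerators(sysfs_root: str = "/sys/bus/pci/devices") -> list[tuple[str, str, str]]:
    """Return (address, vendor, device) for PCI functions with base class 0x12.

    Hypothetical helper for illustration; reads only standard sysfs attributes.
    """
    found: list[tuple[str, str, str]] = []
    root = Path(sysfs_root)
    if not root.is_dir():
        # Not Linux, or sysfs not mounted: nothing to report.
        return found
    for dev in sorted(root.iterdir()):
        try:
            # The "class" attribute is a 24-bit hex value: base class, subclass, prog-if.
            class_code = int((dev / "class").read_text().strip(), 16)
            vendor = (dev / "vendor").read_text().strip()
            device = (dev / "device").read_text().strip()
        except (OSError, ValueError):
            # Skip entries with missing or unparsable attributes.
            continue
        if (class_code >> 16) == ACCELERATOR_BASE_CLASS:
            found.append((dev.name, vendor, device))
    return found


if __name__ == "__main__":
    for addr, vendor, device in find_pci_accelerators():
        print(f"{addr}  vendor={vendor}  device={device}")
```

An empty result does not necessarily mean the module is missing; it may simply report a different class code, in which case `lspci -nn` output is a more general place to look.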